My homelab is a playground for curiosity. After deploying K3s on my Raspberry Pi cluster, I was drawn to the buzz around Talos Linux. An immutable, API-managed, and security-hardened OS designed exclusively for Kubernetes? I had to try it.

After spinning up a Talos cluster, the inevitable happened: new versions of both Kubernetes and Talos were released. While the manual upgrade path is well-documented, I craved a more streamlined management experience. This led me to Sidero Labs Omni, a management platform designed to create, manage, and upgrade Talos clusters with ease.

The official documentation provides a straightforward guide for deploying Omni on-premises using Docker Compose. But where’s the fun in that? I already have a perfectly good Kubernetes cluster running. My goal became clear: deploy Omni on Kubernetes using its Helm chart, even if the path was less traveled.

This post is the guide I wish I had. We’ll walk through deploying Omni on an existing Kubernetes cluster, navigating the OCI-based Helm chart, and tackling the undocumented hurdles I discovered along the way. Crucially, Sidero Labs provides container images for both amd64 and arm64 architectures, which made this whole adventure on my Raspberry Pi cluster possible.

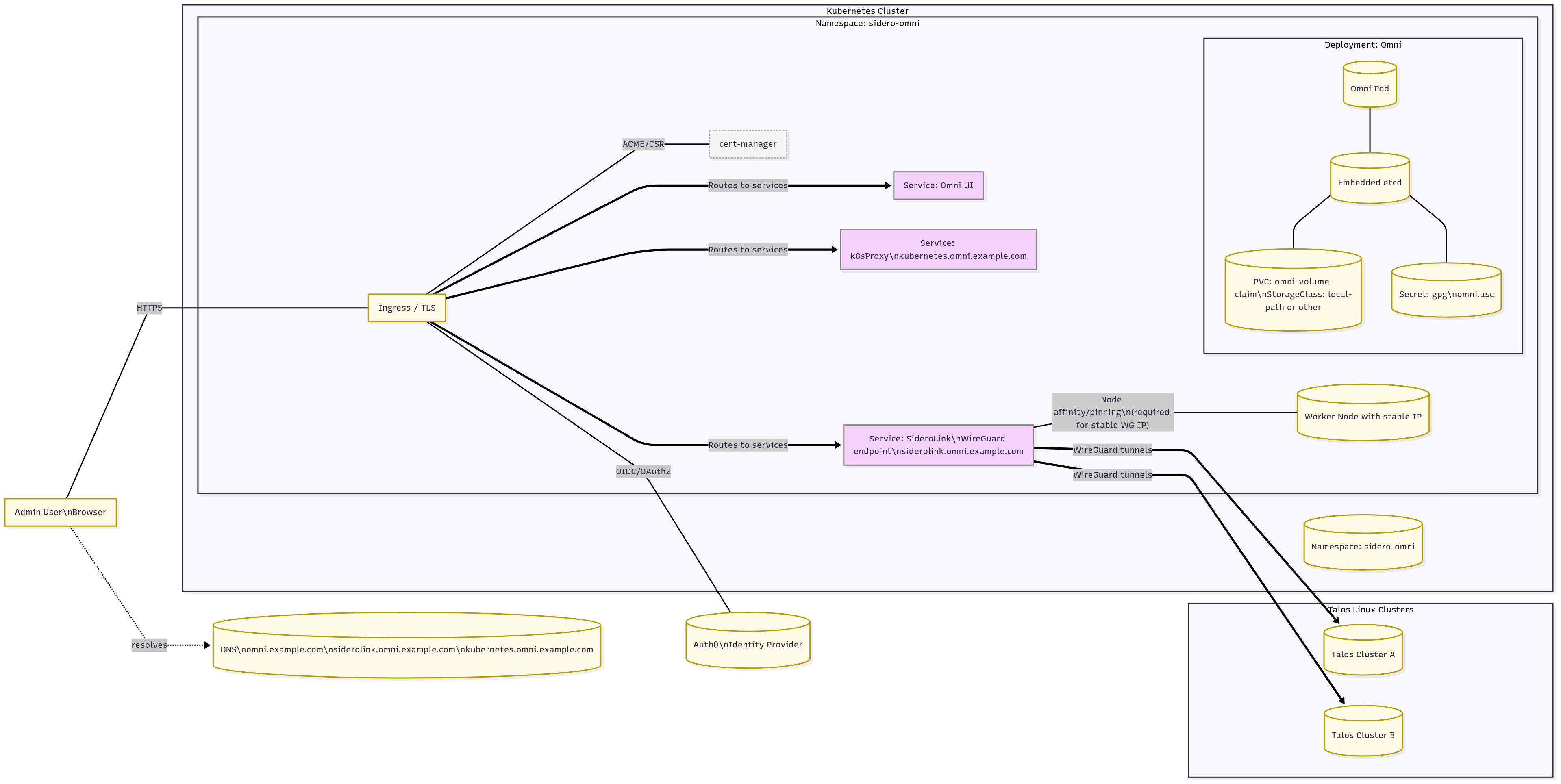

Architecture view

Laying the Groundwork: Prerequisites

Before we can unleash the Helm chart, we need to prepare our cluster. Omni has a few specific requirements for storage, secrets, and configuration.

1. Namespace

First, let’s give Omni a dedicated home in our cluster.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: sidero-omni
      labels:
        name: sidero-omni

2. Persistent Storage for etcd

Omni uses an embedded etcd to store its state. We need to provide it with persistent storage. I’m using the local-path provisioner that comes with K3s, but you can substitute this with any StorageClass available in your cluster.

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: omni-volume-claim
      namespace: sidero-omni
    spec:
      storageClassName: local-path
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi

3. etcd Encryption Key

For security, etcd’s data must be encrypted. Following the official documentation, you’ll generate a GPG key. Once you have the omni.asc file, create a Kubernetes secret from it.

kubectl create secret generic gpg -n sidero-omni --from-file=omni.asc=./omni.asc
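The key generation itself is covered in the official guide. As a rough sketch, it comes down to a certify-only primary key plus an encryption subkey, exported in ASCII armor. The helper below is my own wrapper rather than anything from the Omni docs: the function name generate_omni_key, the comment in the user ID, and the empty passphrase are assumptions (as far as I know, Omni cannot unlock a passphrase-protected key), and it assumes bash with GnuPG 2.2 or newer.

```shell
# Hypothetical helper: creates a key pair for Omni's etcd encryption and
# exports it to ./omni.asc. The function name and user ID text are assumptions.
generate_omni_key() {
  local email="$1" fpr
  if [ -z "$email" ]; then
    echo "usage: generate_omni_key <email>" >&2
    return 1
  fi

  # Primary key: RSA 4096, certify-only, no expiry, empty passphrase.
  gpg --batch --passphrase '' \
    --quick-generate-key "Omni (etcd data encryption) <${email}>" rsa4096 cert never || return 1

  # Look up the fingerprint of the key we just created (first fpr record).
  fpr="$(gpg --list-secret-keys --with-colons "$email" | awk -F: '/^fpr:/ {print $10; exit}')"
  if [ -z "$fpr" ]; then
    echo "could not find a secret key for ${email}" >&2
    return 1
  fi

  # Encryption subkey: RSA 4096, no expiry.
  gpg --batch --passphrase '' --quick-add-key "$fpr" rsa4096 encr never || return 1

  # Export the secret key in ASCII armor for the Kubernetes secret.
  gpg --export-secret-keys --armor "$email" > omni.asc
}
```

Run `generate_omni_key you@example.com` in the directory where you want omni.asc to land, then create the secret with the kubectl command above.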

4. Authentication Setup

Omni needs an identity provider. I opted for Auth0, following the setup guide in the documentation. Keep your Auth0 Domain and Client ID handy; you’ll need them for the values.yaml file.

The Deployment: Crafting the values.yaml

The Omni Helm chart is published as an OCI artifact on GitHub Container Registry (ghcr.io) rather than in a classic chart repository, so there is nothing to helm repo add; Helm takes the oci:// reference directly. The key to a successful installation is a well-configured values.yaml file.

Here is the configuration I used, with explanations for the important bits.

    # The domain where the Omni UI will be accessible.
    domainName: omni.example.com
    # A unique ID for your Omni account. Generate one with `uuidgen`.
    accountUuid: 8CD3421D-2AE8-40DB-A1EF-0D112A385816
    deployment:
      image: ghcr.io/siderolabs/omni
      tag: "v1.1.5" # Pin to a specific version for predictable deployments
    name: "MyOmniInstance"
    auth:
      auth0:
        clientId: <AUTH0_CLIENT_ID>
        domain: <AUTH0_DOMAIN>
    # The email address of the first user, who will be granted admin rights.
    initialUsers:
      - awesome.omni@gmail.com
    service:
      siderolink:
        domainName: siderolink.omni.example.com
      wireguard:
        # This is a critical setting! The pod must be bound to a node with a stable IP.
        address: <IP address of worker node>
      k8sProxy:
        domainName: kubernetes.omni.example.com
    volumes:
      etcd:
        # Use the PVC we created earlier.
        persistentVolumeClaimName: omni-volume-claim
      gpg:
        # Use the GPG secret we created.
        secretName: gpg

And now the command to install it all. Helm expects a release name before the chart reference; I've used omni here:

helm -n sidero-omni install omni oci://ghcr.io/siderolabs/charts/omni --version 0.0.3 -f values.yaml
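Key names and nesting in a values file are easy to get wrong (where wireguard sits, for instance), so it can help to compare values.yaml against the chart's own defaults and to render the manifests before installing. The sketch below assumes Helm 3.8 or newer, where OCI support is on by default. The two function names are hypothetical helpers of mine, and omni is simply the release name I picked.

```shell
chart="oci://ghcr.io/siderolabs/charts/omni"
chart_version="0.0.3"

# Print the chart's default values so key names and nesting can be
# compared with your own values.yaml.
omni_default_values() {
  helm show values "$chart" --version "$chart_version"
}

# Render the manifests locally with your values, without touching the cluster.
omni_render() {
  helm template omni "$chart" --namespace sidero-omni \
    --version "$chart_version" -f values.yaml
}
```

`omni_default_values > omni-defaults.yaml` gives you a file to diff against, and `omni_render | less` shows the arguments the Deployment will actually receive, which is where a misplaced key is easiest to spot.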

The Gotchas: Post-Installation Tuning

Getting the pod running is only half the battle. Here are a couple of crucial details I learned that aren’t immediately obvious from the documentation.

Node Affinity: The service.wireguard.address setting is critical. It requires the Omni pod to run on a specific node so it can have a predictable IP for the WireGuard tunnel to the Talos clusters. However, the Helm chart doesn’t provide a way to set a nodeSelector or affinity rule. If you have a multi-node cluster, you must manually patch the deployment after installation to ensure the pod is always scheduled to the correct node.
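One way to do that patch, as a minimal sketch: write a small patch file that adds a nodeSelector on the well-known kubernetes.io/hostname label. The node name worker-1 and the file name are placeholders, and I'm assuming the chart's Deployment is called omni; `kubectl -n sidero-omni get deployments` will tell you the real name.

```shell
# Placeholder: replace with the node whose IP you set as the WireGuard address.
node_name="worker-1"

# Write a patch that pins the pod template to that node.
cat > omni-node-pin.yaml <<EOF
spec:
  template:
    spec:
      nodeSelector:
        kubernetes.io/hostname: ${node_name}
EOF
```

Apply it with `kubectl -n sidero-omni patch deployment omni --patch-file omni-node-pin.yaml`. If you use local-path storage as I do, keep in mind that the volume also lives on a single node, so the node you pin to should be the one holding the etcd data.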

Exposing Omni with Ingress: The service is running, but you can’t access it from your browser yet. The final step is to create Ingress resources to expose the Omni UI and its related services. The chart’s documentation on GitHub provides the necessary examples. I use cert-manager to automatically provision TLS certificates for my Ingresses, and I highly recommend it for a secure setup.
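To give a feel for the shape of such an Ingress, here is a hedged sketch for the UI hostname only. The Service name omni, port 8080, the ClusterIssuer name letsencrypt, and the TLS secret name are all placeholders I made up for illustration; take the real Service name and port from `kubectl -n sidero-omni get svc` and the issuer from your own cert-manager setup.

```shell
# Write an example Ingress for the Omni UI. Service name, port, issuer,
# and secret name are placeholders, not values taken from the chart.
cat > omni-ui-ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: omni-ui
  namespace: sidero-omni
  annotations:
    # Asks cert-manager to issue a certificate for the hosts under spec.tls.
    cert-manager.io/cluster-issuer: letsencrypt
spec:
  tls:
    - hosts:
        - omni.example.com
      secretName: omni-ui-tls
  rules:
    - host: omni.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: omni
                port:
                  number: 8080
EOF
```

Apply it with `kubectl apply -f omni-ui-ingress.yaml`. The SideroLink API and Kubernetes proxy hostnames need their own rules, and since the SideroLink API speaks gRPC, the chart's examples remain the reference for any controller-specific annotations.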

And there you have it. While deploying Omni on Kubernetes via its Helm chart is certainly more involved than the documented Docker path, the result is a robust, scalable, and integrated management plane for your Talos clusters. You’ve traded a bit of initial complexity for a more powerful, Kubernetes-native setup. Happy cluster managing!