Navigating Kubernetes networking in an on-premises environment can be confusing, especially when it comes to exposing services. Many guides point toward solutions involving external load balancers like HAProxy, Nginx, or even dedicated hardware from F5. But what if you could achieve a fully functional, highly available setup without that extra layer of complexity? In this post, I’ll show you exactly how to do that, covering robust access for both the Kubernetes API server and the applications running inside your cluster.

The principles we’ll cover apply to any Kubernetes flavor, whether you’re running vanilla Kubernetes or a lightweight distribution like K3s. Our setup tackles high availability in two distinct parts: one solution for the control plane’s Kube API and another for the application workloads. You might wonder why we’re using two separate tools. It comes down to a clear separation of concerns, which is a core part of my opinionated approach to building resilient on-prem clusters.

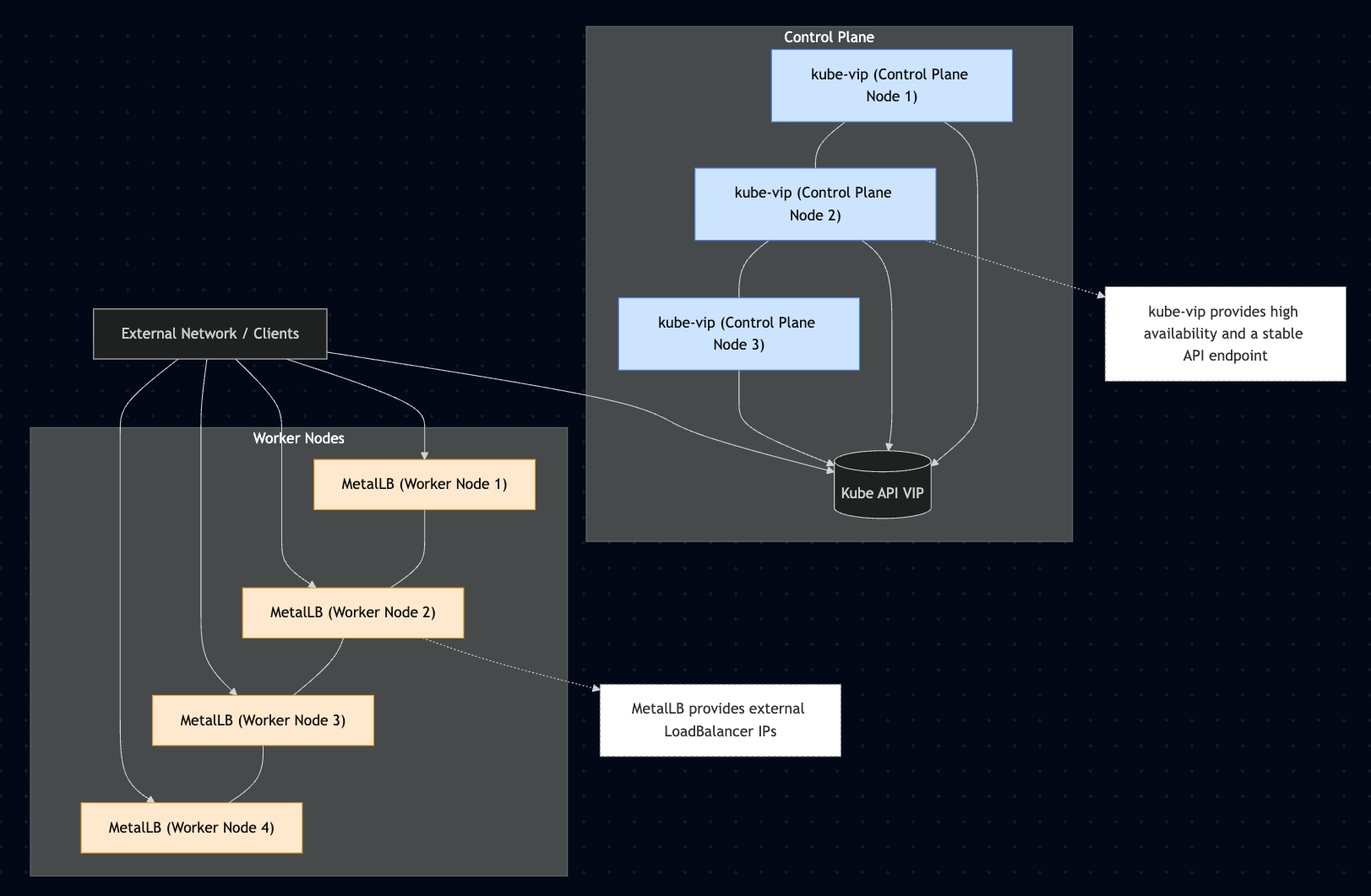

For the control plane, we need a stable entry point. My choice for this is the kube-vip project. It’s a lightweight and powerful solution for creating a highly available Kubernetes control plane by advertising a virtual IP (VIP) on the network. This VIP is dynamically assigned to a healthy control plane node, ensuring the Kubernetes API server remains accessible through a stable endpoint even if a node goes down. Beyond the control plane, kube-vip can also manage VIPs for services of type LoadBalancer, effectively replacing the need for an external load balancer for your workloads, but in our opinionated setup we will use another solution for the workloads.

Before we dig into the technical details, here is a high-level diagram of the architecture described in this tutorial.

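I tend to use the DaemonSet installation method for kube-vip, but Static Pods work just as well. The project’s documentation is quite self-explanatory. The most critical step is to ensure you add the virtual IP as an additional Subject Alternative Name (SAN) to the API server certificate. Without it, clients that connect through the VIP will fail TLS verification, because the certificate presented by the API server won’t list the address they dialed.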
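As a rough illustration of the SAN step, here is a minimal sketch for a kubeadm-based cluster. The VIP `192.168.1.100`, the DNS name, and the file name are placeholders I made up for this example, and the `apiVersion` may differ depending on your kubeadm release.

```yaml
# kubeadm-config.yaml (hypothetical file name), used with `kubeadm init --config kubeadm-config.yaml`
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
# Assumed VIP that kube-vip will advertise; replace with an address from your own network.
controlPlaneEndpoint: "192.168.1.100:6443"
apiServer:
  certSANs:
    - "192.168.1.100"             # the virtual IP itself
    - "k8s-api.example.internal"  # optional, hypothetical DNS name pointing at the VIP
```

On K3s, the equivalent knob is the `tls-san` option in the server configuration.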

Now that our control plane is highly available, let’s address the second part of our setup: a load balancer for the workloads. For this, I use the MetalLB project. MetalLB is a popular network load-balancer implementation for bare-metal Kubernetes clusters, filling a crucial gap for users not running on a cloud provider. It allows you to create services of type LoadBalancer and assigns them an IP address from a pre-configured pool. MetalLB operates in two primary modes: Layer 2 and BGP. In Layer 2 mode, it uses standard protocols (ARP for IPv4, NDP for IPv6) to announce a service IP from a single node, with automatic failover to another node if that one becomes unavailable. For more advanced scenarios, BGP mode allows MetalLB to peer with your network routers, enabling true multi-node load balancing and more resilient traffic distribution.
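From the application side, nothing MetalLB-specific is required: a plain Service of type LoadBalancer is enough. The sketch below assumes a hypothetical workload labeled `app: demo-web` listening on port 8080; the names and ports are illustrative only.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: demo-web          # hypothetical service name
spec:
  type: LoadBalancer      # MetalLB assigns an address from its pool to this service
  selector:
    app: demo-web         # hypothetical pod label
  ports:
    - port: 80            # port exposed on the assigned IP
      targetPort: 8080    # assumed container port
```

Once MetalLB is configured, `kubectl get svc demo-web` should show the assigned address in the `EXTERNAL-IP` column.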

Since our setup is straightforward, we’ll use Layer 2 mode. After deploying MetalLB via its Helm chart or Kustomize manifests (don’t forget the CRDs!), we just need to create an IPAddressPool and a corresponding L2Advertisement to make it operational.
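A minimal sketch of those two resources could look like the following. The address range and the resource names are assumptions for illustration; pick a range that is free on your network and outside your DHCP scope. The `metallb-system` namespace matches the default MetalLB installation, so adjust it if you deployed elsewhere.

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: workloads-pool            # hypothetical pool name
  namespace: metallb-system
spec:
  addresses:
    - 192.168.1.240-192.168.1.250 # assumed free range on the node network
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: workloads-l2              # hypothetical advertisement name
  namespace: metallb-system
spec:
  ipAddressPools:
    - workloads-pool              # announce addresses from the pool above in Layer 2 mode
```

Apply both with `kubectl apply -f` after the MetalLB pods are running.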

To summarize, by combining the strengths of kube-vip for control plane high availability and MetalLB for workload services, you can build a robust and resilient on-premises Kubernetes cluster without the need for external hardware or complex load balancing software. This dual approach provides a stable VIP for the Kubernetes API server, ensuring continuous management access, while also enabling standard LoadBalancer services for your applications, just as you would in a cloud environment. This demonstrates the power and flexibility of the open-source ecosystem, making production-grade, on-prem Kubernetes more accessible and cost-effective than ever before.